Special Articles on 5G Evolution & 6G (2)—Initiatives toward Implementation and Use Cases—

Video-transmission Processing Platform for Embodying Ultra-low Latency, Uninterrupted Viewing, and Ultra-realistic Feeling

Variable Transmission Rate Control, Video Quality Enhancement, AI

Ryoko Miyachi, Eisaku Higuchi and Yasuhiko Yamakawa

6G Network Innovation Department

Abstract

The development of high-definition video and high-speed, large-capacity wireless communications in recent years is raising expectations of high-quality video transmission services using wireless communications and their application to social infrastructures. However, a variety of limitations exist in wireless communications such as fluctuations and momentary interruptions in the communication band. NTT DOCOMO is developing a video-transmission processing platform that makes effective use of the communication band to reconcile the expectations for wireless video communications with the limitations of wireless communications. This article describes key functions of this video-transmission processing platform and field experiments applying those functions and discusses the future outlook for video use cases.

01. Introduction


The 5th-Generation mobile communications system (5G) launched in March 2020 features high speed and high capacity, high reliability and low latency, and massive device connectivity. The use of services embodying these features continues to expand not only for general consumers but also in a variety of fields including industrial equipment and social infrastructures. Use cases using 5G cover a wide range of applications such as entertainment, security, remote operation, and automated driving, and many of these use cases utilize video, so video services that use wireless communications are expected to expand for both consumers and enterprises and to evolve even further.

Wireless communications, however, suffer from a variety of limitations. Communication speed*1 changes constantly with the environment, for example through fluctuations in the communication band*2 while the terminal is moving and through changes in the communication status of other users on the network. In addition, user traffic is growing as 5G services spread and expand, so these limitations are expected to become increasingly severe going forward. Even now, there are many environments in which the communication speed is insufficient for transmitting high-quality video. If an application attempts to transmit more data than the available communication speed allows under such limitations, a significant amount of data may be held up in the network, and some of it may even be lost. Consequently, if video services that use wireless communications are operated without understanding and accommodating these limitations, interruptions, quality degradation, and delay in the received video may hinder use of the service.

Against the above background, NTT DOCOMO is developing a video-transmission processing platform to reconcile the expectations for wireless video services with the limitations of wireless communications and implementing it on docomo MEC*3. In this article, we first describe two key functions of this platform: one for transmitting video data at an appropriate transmission rate tailored to the communication environment, and the other for providing high-quality video even in an environment in which sufficient communication speed cannot be obtained. We then describe various field experiments applying these functions and discuss the future outlook for video use cases envisioned by NTT DOCOMO.

- Communication speed: The amount of data that can be transmitted and received.

- Communication band: The range of frequencies used for communications.

- docomo MEC: A Multi-access Edge Computing (MEC) service provided by NTT DOCOMO that places servers near the user within the NTT DOCOMO 5G core network and provides a virtual machine environment and connection environment to achieve low latency and high security.


2.1 Variable Transmission Rate Control based on Communication Speed


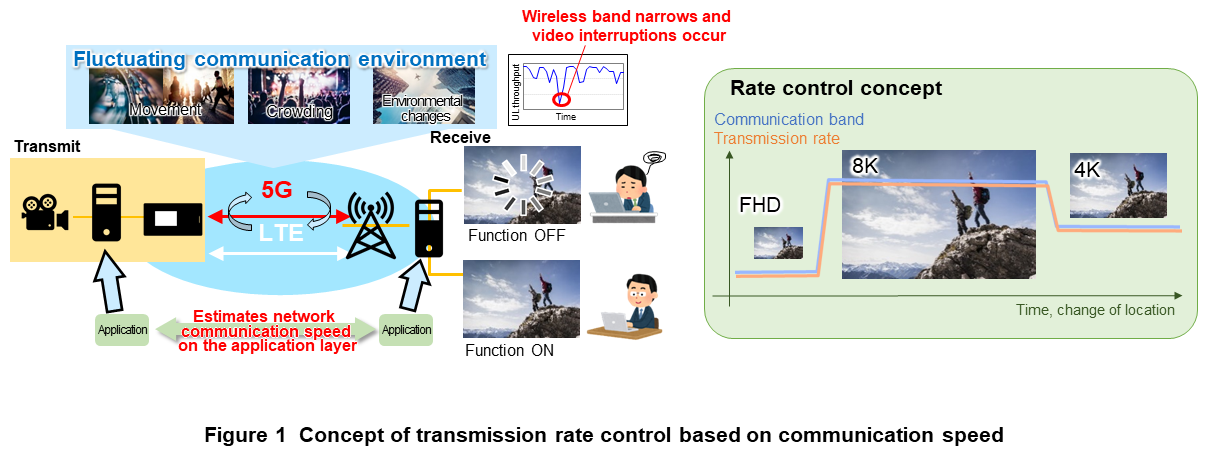

This function estimates the constantly fluctuating network communication speed and appropriately controls the transmission rate to achieve smooth, real-time video transmission that suppresses video interruptions [1][2].

Transmitting high-quality video sends a large quantity of data onto the network, and if the sender attempts to transmit faster than the network can carry, data is held up or lost in the network, resulting in video interruptions or increased delay. This function therefore exchanges information such as Round Trip Time (RTT)*4 and packet loss*5 rate between the applications sending and receiving video data and uses this information to estimate the network communication speed in real time, in units of milliseconds (Figure 1). Then, when sending video data onto the network, the function dynamically adjusts the video resolution and the compression ratio in video codec processing*6 according to the estimated communication speed. As a result, video data can be transmitted at an appropriate transmission rate even if the communication speed of the network changes, thereby suppressing video interruptions and increases in delay.
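As a rough illustration of estimating a sustainable rate from RTT and packet loss, the following sketch uses the TCP throughput equation from RFC 5348 (TFRC), which the article cites as reference [2]. The article does not state that the platform uses this exact equation. The encoding ladder, the `headroom` margin, and all function names are assumptions made for this example only.

```python
import math

def tfrc_throughput_bps(packet_size_bytes, rtt_s, loss_rate, b=1):
    """TCP throughput equation from RFC 5348, Section 3.1, in bits per second."""
    if loss_rate <= 0:
        # The equation is undefined without loss; the caller must cap the rate.
        return float("inf")
    t_rto = 4 * rtt_s  # simplification suggested in RFC 5348
    denom = (rtt_s * math.sqrt(2 * b * loss_rate / 3)
             + t_rto * (3 * math.sqrt(3 * b * loss_rate / 8))
             * loss_rate * (1 + 32 * loss_rate ** 2))
    return 8 * packet_size_bytes / denom

# Hypothetical encoding ladder: (label, required bitrate in bit/s), best first.
LADDER = [("2160p", 25_000_000), ("1080p", 8_000_000),
          ("720p", 4_000_000), ("360p", 1_000_000)]

def pick_rung(estimated_bps, headroom=0.8):
    """Return the highest rung whose bitrate fits within a safety margin."""
    budget = estimated_bps * headroom
    for label, bitrate in LADDER:
        if bitrate <= budget:
            return label
    return LADDER[-1][0]  # nothing fits: fall back to the lowest rung

if __name__ == "__main__":
    estimate = tfrc_throughput_bps(1200, rtt_s=0.05, loss_rate=0.01)
    print(round(estimate), pick_rung(estimate))
```

In this sketch the sender would re-run the estimate whenever new RTT and loss feedback arrives and switch rungs accordingly; a real controller would also smooth the measurements to avoid oscillating between resolutions.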

Furthermore, with the aim of improving the accuracy of this function even further, NTT DOCOMO is currently studying a cooperative estimation technique that uses base station information and terminal information (excluding personal information) in addition to the above method. Additionally, to test the effectiveness of this function when applied to various types of video use cases, NTT DOCOMO is conducting field experiments in parallel. Those experiments will be described later in this article.

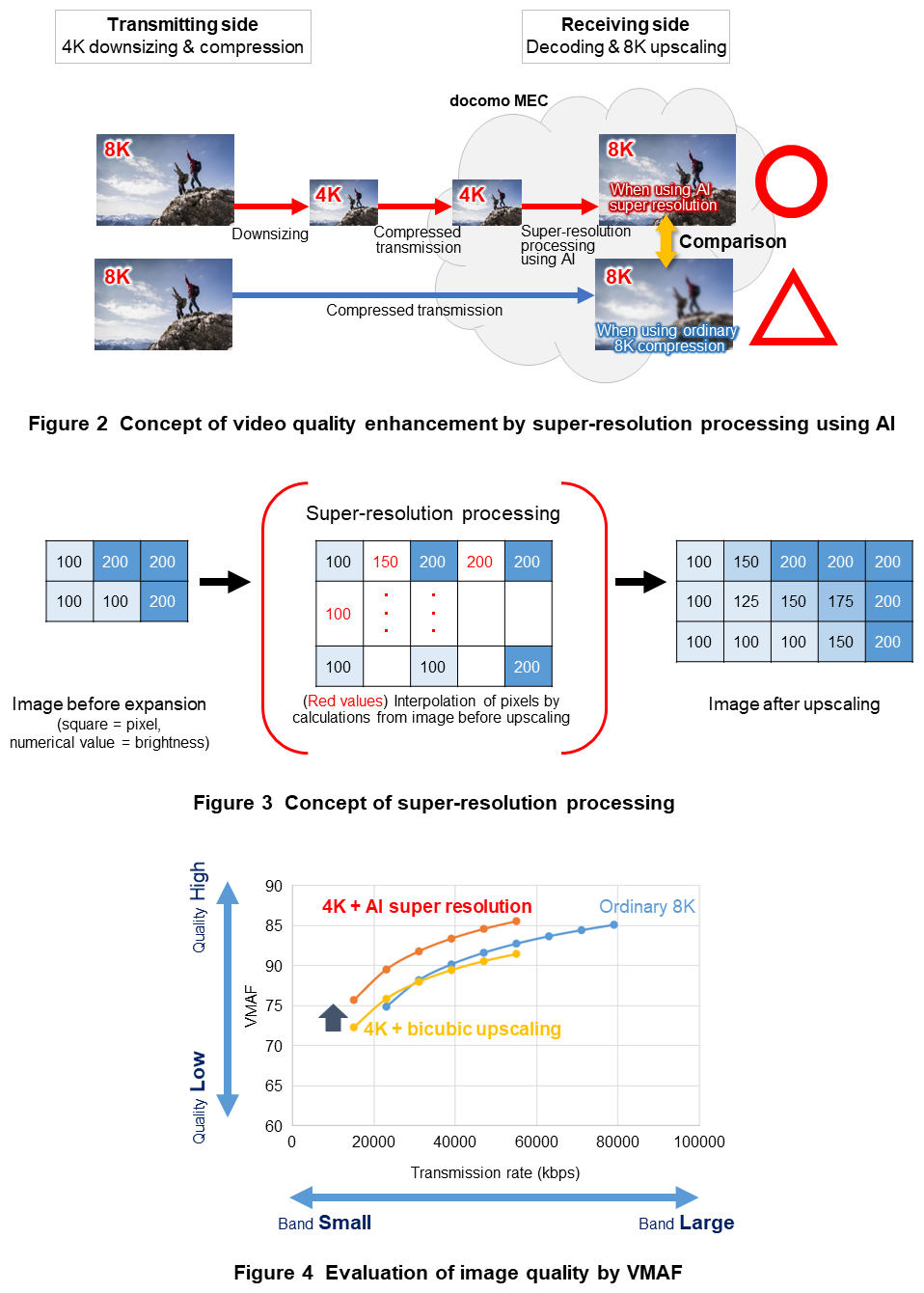

2.2 Video Quality Enhancement Using AI Super Resolution

This function enables the provision of high-quality video equivalent to the originally captured video while keeping a low transmission rate by performing super-resolution processing using AI on low-capacity video data transmitted in compressed form (Figure 2).

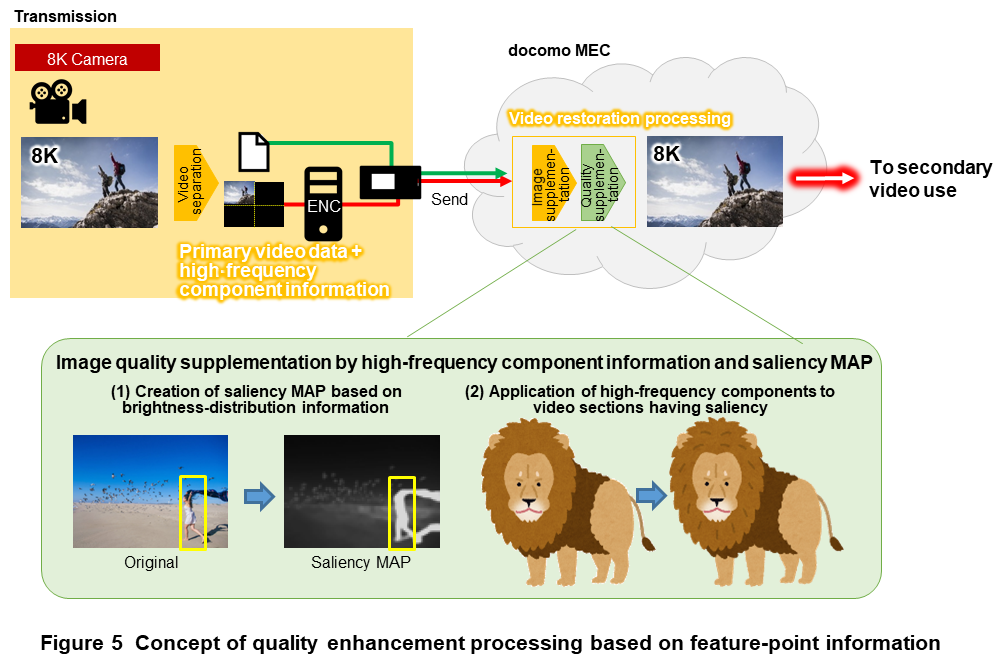

Super-resolution processing is an image processing technology that generates high-resolution images from low-resolution images. As shown in Figure 3, this process generates a high-resolution image by performing calculations to interpolate pixels between surrounding pixels. Traditional interpolation methods include linear interpolation and the bicubic method*7, but these methods may not produce high-quality video. Using AI in interpolation processing, by contrast, can generate higher-quality images than the traditional methods.
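To make the idea of interpolating pixels between surrounding pixels concrete, here is a minimal sketch of bilinear upscaling, one of the traditional non-AI methods. It is a baseline illustration only, not the AI super-resolution used on the platform, and it assumes a grayscale image stored as a list of rows and an integer scale factor.

```python
def upscale_bilinear(img, factor):
    """Upscale a 2-D grayscale image (list of rows) by an integer factor."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * factor):
        # Map the output row back to a fractional source coordinate.
        sy = min(y / factor, h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * factor):
            sx = min(x / factor, w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally on the two nearest rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out

if __name__ == "__main__":
    for line in upscale_bilinear([[0, 10], [20, 30]], 2):
        print(line)
```

Every new pixel here is a weighted average of its neighbors, so fine texture that was not in the low-resolution input cannot be recovered. That limitation is what learned super-resolution models try to overcome.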

Moreover, in video transmission, the transmission rate is an important factor in video quality. Figure 4 shows the results of using an objective evaluation index called Video Multi-Method Assessment Fusion (VMAF)*8 [3] to evaluate the quality of received video when transmitting video at different transmission rates for different resolutions and image-processing systems. Here, a higher VMAF value means higher image quality and sharper video. These results show that, especially at a low transmission rate, transmitting 4K video at a low compression ratio and upscaling it to 8K with AI super-resolution yields sharper video than transmitting 8K video as-is at a high compression ratio.

Based on these results, in environments in which sufficient communication speed cannot be ensured, NTT DOCOMO lowers the resolution of the video, transmits it in compressed form, and performs AI super-resolution on the received data on docomo MEC. This enables the provision of high-quality video while keeping the transmission rate low.

On the other hand, super-resolution processing using AI suffers from two key problems: a large processing load and a processing delay. Achieving real-time video transmission requires a reduction in processing load and low-latency operation. NTT DOCOMO has addressed these issues by combining an algorithm that automatically specifies priority areas based on video characteristics with an AI-based recognition function that defines the areas in which super-resolution processing should be prioritized and performed. This approach reduces processing load and achieves low-latency operation.
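The article does not specify how priority areas are chosen. As a purely illustrative sketch, the following code assumes local pixel variance as a stand-in for "video characteristics" and assumes a fixed per-frame budget of tiles that receive the costly enhancement path. The tile size, the scoring rule, and the function names are all hypothetical.

```python
def tile_variances(img, tile):
    """Return {(tile_row, tile_col): variance} for each tile x tile block."""
    scores = {}
    for ty in range(0, len(img), tile):
        for tx in range(0, len(img[0]), tile):
            pixels = [v for row in img[ty:ty + tile] for v in row[tx:tx + tile]]
            mean = sum(pixels) / len(pixels)
            var = sum((v - mean) ** 2 for v in pixels) / len(pixels)
            scores[(ty // tile, tx // tile)] = var
    return scores

def pick_priority_tiles(img, tile, budget):
    """Choose the `budget` highest-variance tiles for the costly path."""
    scores = tile_variances(img, tile)
    ranked = sorted(scores, key=lambda k: scores[k], reverse=True)
    return set(ranked[:budget])

if __name__ == "__main__":
    frame = [[5, 5, 0, 100],
             [5, 5, 100, 0],
             [5, 5, 5, 6],
             [5, 5, 6, 5]]
    print(pick_priority_tiles(frame, tile=2, budget=1))
```

Tiles outside the selected set could be upscaled with a cheap interpolation, so the per-frame cost is bounded by the budget rather than by the frame size.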

2.3 Video Quality Enhancement based on Feature-point Information

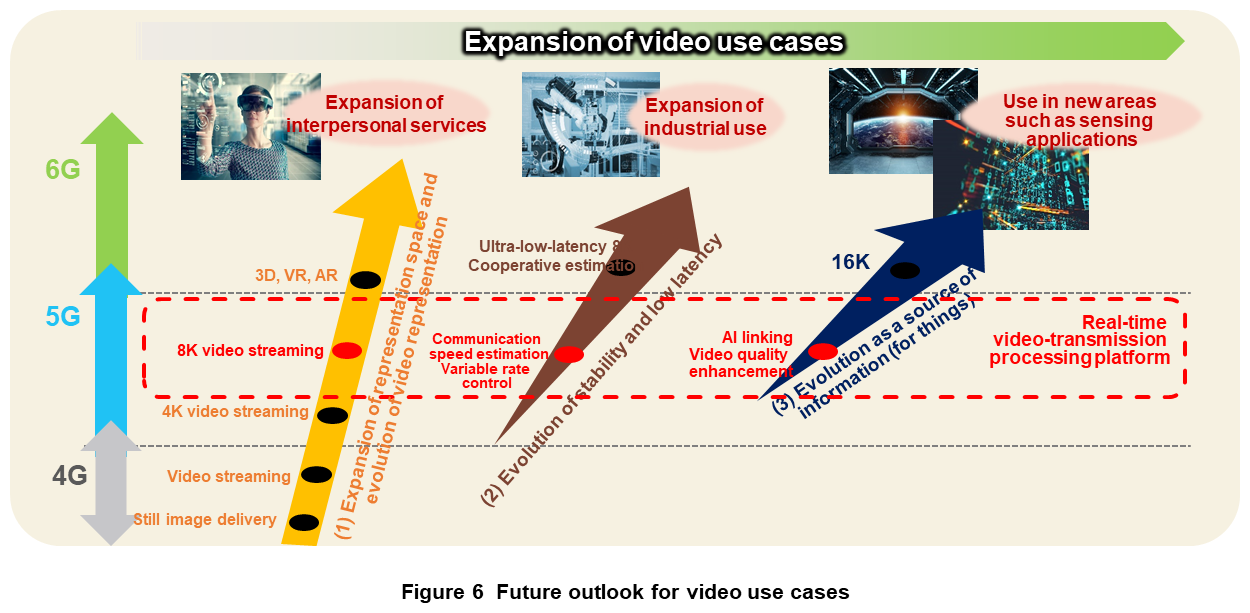

Similar to the function described above that enhances video quality through AI super-resolution processing, this function likewise enhances the quality of low-capacity video data transmitted in compressed form, but it does so by reproducing high-quality video based on feature-point information extracted beforehand from the video data.

This function first separates the video data to be transmitted into primary video data and high-frequency component information*9 on the video transmission side (Figure 5). In ordinary video transmission, video data is sent onto the network after codec processing, but quantization*10 deletes high-frequency component information to reduce the amount of information, resulting in a significant loss of video texture. This function addresses this issue by extracting the high-frequency component information beforehand, reducing the resolution of only the remaining primary video data in accordance with the communication environment, and transmitting both after codec processing. Then, after upscaling the received video on docomo MEC to its original resolution, the function applies ringing reduction*11 and jaggy reduction*12 to the video degraded by codec processing during transmission. The function also performs contour correction with an enhance function*13 and reproduces the video texture by applying the high-frequency component information. At this point, the YCrCb*14 information of the received video is used to generate a saliency MAP*15, which in turn is used to judge the shading information of color and adjust the intensity of the high-frequency components, so that video with a sense of presence can be reproduced.
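The separation into a primary part and high-frequency detail can be sketched on a one-dimensional signal. This example assumes a simple box blur as the low-pass filter and a single scalar `gain` standing in for the saliency-driven intensity adjustment. The article does not describe the actual filters, and the downscaling and codec steps are omitted here.

```python
def box_blur(signal, radius=1):
    """Low-pass filter: average each sample with its neighbors (edges clamped)."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def split_bands(signal, radius=1):
    """Split a signal into a smooth primary part and a high-frequency residual."""
    primary = box_blur(signal, radius)
    detail = [s - p for s, p in zip(signal, primary)]
    return primary, detail

def restore(primary, detail, gain=1.0):
    """Add the residual back; gain < 1 softens texture, gain > 1 emphasizes it."""
    return [p + gain * d for p, d in zip(primary, detail)]

if __name__ == "__main__":
    line = [10, 10, 50, 10, 10]
    primary, detail = split_bands(line)
    print(primary)
    print(detail)
    print(restore(primary, detail))
```

With `gain=1.0` and no lossy step in between, adding the residual back reproduces the input, which shows why carrying the detail separately can preserve texture that quantization of the full signal would otherwise discard.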

- RTT: The round-trip time from transmitting data to receiving a response in return.

- Packet loss: The vanishing of some or all data without being delivered.

- Codec processing: The compression or decoding of video or audio data using an encoding scheme.

- Bicubic method: A technique for interpolating a pixel value by a cubic function using 4x4 pixels surrounding the target pixel.

- VMAF: A machine-learning-based image-quality evaluation index that can evaluate image quality closer to human subjectivity.

- High-frequency component information: The high-frequency components among the spatial frequency components in an image. The portions of an image with intense variation include many high-frequency components.

- Quantization: A process of mapping input values to a smaller set of predetermined discrete values. While resulting in some distortion, quantization can significantly reduce the amount of information.

- Ringing reduction: A video processing method for making vivid video by removing contour noise in high-contrast portions of the image.

- Jaggy reduction: A video processing method for smoothing out contour by removing stair-shaped jaggedness appearing in lines or contours.

- Enhance function: A video processing method for making clear and distinct video by emphasizing contours.

- YCrCb: The quantifying of brightness and color-difference information for expressing color.

- Saliency MAP: A video processing method that performs mapping by applying binarization to the input image by a special method and extracting portions of the image having features from information such as color shading.


The functions described above enable the provision of high-quality video under a variety of communication limitations, but their usefulness can be tested properly only with a correct understanding of the actual conditions of individual use cases. For this reason, NTT DOCOMO has been conducting a variety of field experiments using these functions. The following introduces several of these experiments, including some that are currently being planned.

1) Remote Monitoring of Self-driving Vehicles and Remote Operation of Vehicles

The development of automated driving technology and field experiments of that technology are accelerating in countries around the world with the aim of creating a safe and secure society with no accidents, easing congestion, providing an efficient means of transport, and easing the load on drivers. In Japan, as well, revision of the Road Traffic Act in 2022 removed the ban on Level 4 automated driving, i.e., the operation of a vehicle by an automated driving system without driver intervention under certain conditions such as the introduction of remote monitoring. In Level 4, the remote monitoring of the vehicle and its peripheral environment is indispensable for safe vehicle operation, and there is also a need for operating the vehicle from a remote location to deal with unforeseen circumstances. In these cases, the ability to continuously transmit video data with low latency in a stable manner is an important requirement so that video of the vehicle's surroundings from the viewpoint of that vehicle can be visually confirmed at all times. With this in mind, NTT DOCOMO has conducted a real-time video transmission experiment from a vehicle traveling on public roads and a remote vehicle operation experiment using the “variable transmission rate control function based on communication speed” to verify if the above requirement can be satisfied by using that function.

First, in the real-time video transmission experiment from a vehicle running on public roads, a vehicle mounted with a Full High Definition (FHD) camera was made to run at regulation speeds on public roads in Yokosuka City, Kanagawa Prefecture. At this time, the video captured by the camera was transmitted in real time to an NTT DOCOMO building in the same city over a public cellular circuit. The driving route was an LTE environment surrounded by mountains in which communication speed was constantly changing. Under such conditions, transmitting video with a fixed transmission rate would result in phenomena such as the occurrence of disturbances, breaks in the received video, or the complete freezing of video. When applying the variable transmission rate control function, however, resolution could be seen to drop while the vehicle passed through an area with insufficient communication speed, but it was confirmed that no breaks would occur in the received video.

Next, in the remote vehicle operation experiment, video of the surrounding environment of an experimental vehicle running on a vehicle test course in Kashiwa City, Chiba Prefecture was transmitted in real time to an NTT DOCOMO building in Yokosuka City over public cellular circuits including 5G. Then, while viewing the received video at that NTT DOCOMO building, an operator performed remote operation such as driving the vehicle straight ahead or making left turns (Photo 1). Although the vehicle was driven at very low speeds to conduct the experiment safely, the quality of the received video and the video delay including codec processing was found to be at a level creating no problems for remote operation. It was also confirmed that remote operation of the vehicle could be performed from distant locations [4].

2) Determining a Patient's Condition during Emergency Transport Activities

In recent years, the hospital admittance time in relation to emergency-vehicle services has been increasing as traffic in urban areas increases and the area under a hospital's jurisdiction expands due to the merging of outlying cities, towns and villages. To provide a patient being transported with appropriate medical treatment without delay, it is critical that a qualified medical professional obtain an understanding of the patient's condition as quickly as possible. At present, however, it is often difficult for a medical professional to grasp details such as the extent of a patient's seizures from a vocal report by emergency-vehicle personnel. At NTT DOCOMO, we conducted a field experiment on transmitting in real time video showing the condition of a transported patient (mock patient) from a traveling emergency vehicle (mock vehicle) to the destination hospital using the variable transmission rate control function based on communication speed described above. Our aim here was to test the usefulness of being able to check on the condition of a patient in the middle of emergency transport.

Specifically, we transmitted video in real time from a mock vehicle traveling at regulation speeds on public roads in Shinshiro City, Aichi Prefecture to a hospital in the same city over public cellular circuits. We also interviewed medical professionals who viewed the received video. Since the route taken by an emergency transport vehicle cannot be decided beforehand, we needed to check whether the transmission rate control function could be applied to all sorts of environments. For the mock vehicle, we therefore selected a route on which communication speed would fluctuate dramatically, covering both LTE and 5G areas and both mountainous and urban areas. To decide which video to evaluate, we interviewed a medical professional beforehand to find out what types of video would be needed to check on the condition of a transported patient. Based on the results of that interview, we captured and transmitted video of the mock patient's entire body, upper body, affected area, and electrocardiogram (ECG) using multiple 4K cameras for each view (Photo 2).

Video interruptions and freezing occurred when the variable transmission rate control function was not applied. When the function was applied, the quality of the received video fluctuated with the communication speed, but no interruptions occurred. For the case of applying the function, we obtained comments such as “I was able to check on matters that are difficult to convey orally” and “Being able to understand the patient's degree of urgency beforehand makes it possible to select an appropriate transport destination and eliminate any harmful effects in treatment due to a mismatch with that destination.” On the other hand, even with the function applied, there were situations in which the transmission rate dropped below 1 Mbps, resulting in a significant drop in the quality of the received video. In such cases, we heard comments such as “Image quality is rough and determining the patient's condition can be difficult depending on the patient's symptoms.” We also heard “At present, the type of emergency treatment that emergency personnel can intervene with during transport is limited.” We therefore plan to study means of improving video quality and methods of contributing to emergency medical treatment both now and in the future.

3) Determining Disaster Conditions Using Drone Video

Large-scale natural disasters have been occurring frequently in recent years in regions throughout Japan causing tremendous damage. Providing immediate aid and restoration efforts requires that damage be assessed quickly, and to this end, the video obtained from cameras mounted on disaster-oriented drones is considered to be effective. The communication environment in the air is more severe than that on the ground, so real-time transmission by the variable transmission rate control function and the conversion of wide-area video captured from a distance into high-definition video are considered to be useful for quickly and safely grasping disaster conditions. At present, field experiments for this use case have yet to be conducted, but should be pursued in the future.


The future outlook for video use cases is shown in Figure 6. Up to now, video services have expanded around high-definition video such as 4K and 8K, but in the 5G era, new video services that make full use of 5G features are being created for both consumers and enterprises. In fact, most current 5G use cases are video-based services, and video use cases are expected to expand even further toward the 6G era. Specifically, the following three trends can be noted.

- Expansion of interpersonal services through the expansion of representation space and evolution of video representation

- Expansion of industrial use through evolution of stability and low latency

- Use in new areas such as sensing applications through evolution as a source of information

In any case, it is expected that the use of video will accelerate in providing highly realistic experiences, supporting highly mission critical*16 areas, etc. However, it should be pointed out that opportunities for applying video with even higher levels of definition such as 16K to “people” are limited. On the other hand, it is predicted that the use of such high-definition video by “things” will increase in new ways by becoming a source of sensor information, linking with AI, etc. and that its use will expand to new areas such as advanced use cases related to safety and security and the analysis of unknown worlds. The current video-transmission processing platform is positioned within the area bounded by the dashed line in Figure 6, but at NTT DOCOMO, we are aiming for evolution toward the 6G era, for example through studies on a cooperative estimation technique that uses base station information and terminal information as described earlier.

- Mission critical: A system for which the provision of services in a continuous manner is extremely important; an interruption due to a failure, etc. can cause great damage and cannot be allowed to occur.


This article described a function for transmitting video data at an appropriate transmission rate tailored to the communication environment and a function for enhancing the quality of video data transmitted in compressed form as video transmission/processing technologies that make effective use of the communication band. It also described field experiments using these functions and discussed the future outlook for video use cases. In future video use cases, we can expect to see accelerated provision of highly realistic experiences and increased use of video in highly mission critical areas all under a variety of limitations in wireless communications. The functions introduced in this article are considered to be useful in such use cases, but as described earlier, some issues are being identified. Going forward, we plan to work on solving those issues and to extend our video-transmission processing platform by conducting more field experiments and coordinating with various types of analysis technologies toward the use of video in new application areas.


REFERENCES


- [1] T. Higuchi, N. Shimizu, H. Shingu, T. Miyagoshi, M. Endo, H. Asano, Y. Morihiro, and Y. Okumura: “Video Sending Rate Prediction Based on Communication Logging Database for 5G HetNet,” 2018 IEEE 87th Vehicular Technology Conference (VTC Spring), Jun. 2018 (DOI: 10.1109/VTCSpring.2018.8417825).

- [2] S. Floyd, M. Handley, J. Padhye, and J. Widmer: “TCP Friendly Rate Control (TFRC): Protocol Specification,” RFC 5348, Sep. 2008.

- [3] Z. Li, A. Aaron, I. Katsavounidis, A. Moorthy, and M. Manohara: “Toward A Practical Perceptual Video Quality Metric,” THE NETFLIX TECH BLOG, Jun. 2016.

- [4] T. Maruko et al.: “NTT DOCOMO Initiatives toward Cooperative Automated Driving Using C-V2X,” NTT DOCOMO Technical Journal, Vol. 25, No. 3, Jan. 2024.