Special Articles on XR—Initiatives to Form an XR Ecosystem—

NTT XR Studio—A Studio for Capturing, Editing, and Distributing XR Content

Volumetric Video, Motion Capture, Contents Distribution

Momoko Abe†1, Yohei Fujimoto†2, Sadaatsu Kato†2, Naoto Matoba†2, Kazuyuki Yoshiyama†2 and Eiichi Asakawa†2

Communication Device Development Department

†1 Currently, Product Design Department

†2 Currently, NTT QONOQ, INC.

Abstract

Establishing technologies for content production, editing, and distribution has become a key factor in the popularization of XR services. In January 2021, NTT DOCOMO opened the NTT XR Studio, a studio dedicated to XR capturing, editing, and distribution, in the Telecom Center Building in the Odaiba district, Tokyo. This article describes the studio's facilities and the technology and features of each system installed in it, and presents actual examples of their use.

01. Introduction

In recent years, establishing technologies for content production, editing, and distribution has become a key factor in popularizing eXtended Reality (XR) services. In particular, two technologies are essential for representing people, including oneself, in services that use XR space: representing a person in three dimensions as an avatar, and capturing volumetric video*1, which stores a person's appearance and movements as-is as 3-Dimensional (3D) video. Moreover, a one-stop service that integrates everything from editing to distribution of XR content, whether captured in-house or acquired from external sources, will enable services for producing and distributing XR content to be introduced smoothly.

In January 2021, NTT DOCOMO opened NTT XR Studio, a studio dedicated to capturing, editing, and distributing XR content, in the Telecom Center Building in the Odaiba district, Tokyo. The studio is equipped with a series of systems for capturing volumetric video and for editing and distributing the recorded content. We have also built a motion-capture system for capturing and distributing a person's movements in real time. In this article, the technologies and features of each of the systems installed in this studio are described, and examples of their use to date are presented.

- *1 Volumetric video: 3D moving-image data of a person or object, consisting of the object's shape data and surface-texture data (see *2).

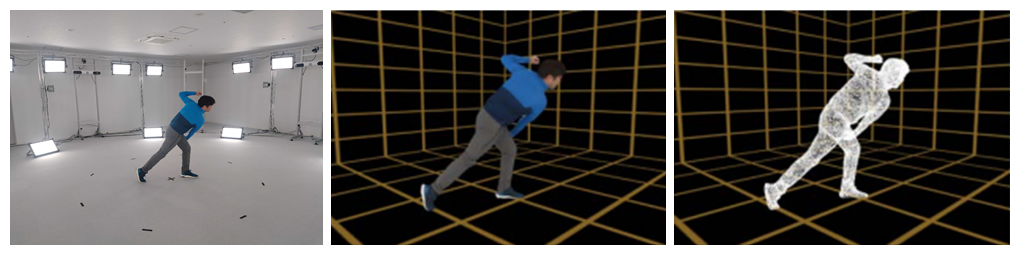

NTT XR Studio is equipped with state-of-the-art equipment for creating volumetric video, that is, 3D images in digital space reconstructed from objects captured from multiple angles. Particularly distinctive among this equipment is the capturing system "TetaVi Studio" by TetaVi Ltd. (Israel), which does not require the green screen (a uniform green background) normally needed when capturing volumetric video. This is possible because, in addition to conventional cameras that capture 3D texture*2, the equipment acquires depth information*3 (i.e., the distance to the subject), which makes it possible to separate the person being captured from the surroundings (Figure 1).

The system mainly targets one or two people as subjects and can capture them within a virtual cylinder 2.9 m in diameter and 1.8 m high. It can capture moving images as well as still images; when the subject moves, the 3D data is recorded as a time series. The captured 3D data can be viewed from any direction (i.e., a 360-degree field of view), and the viewer can zoom in and out by moving the viewing position forward and backward. Moreover, the system is portable: it can be installed in any indoor location where brightness can be controlled with lighting, and because the background is not restricted, it can capture subjects in front of theatrical stage sets or sports equipment.
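
The capture volume quoted above is a simple cylinder, so checking whether a performer's tracked points stay inside it reduces to a radius test and a height test. The following minimal Python sketch assumes a coordinate frame with its origin at the center of the cylinder's floor and the y axis pointing up. The class and method names (`CaptureVolume`, `contains`, `frame_fits`) are hypothetical and are not part of the TetaVi system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptureVolume:
    """Cylindrical capture volume; defaults are the dimensions quoted in the text."""
    diameter_m: float = 2.9
    height_m: float = 1.8

    def contains(self, x: float, y: float, z: float) -> bool:
        """Return True if the point (x, y, z), in meters, lies inside the cylinder.

        Assumed frame (hypothetical): origin at the center of the floor disc, y up.
        """
        radius = self.diameter_m / 2.0
        return math.hypot(x, z) <= radius and 0.0 <= y <= self.height_m

    def frame_fits(self, points) -> bool:
        """Check that every tracked point of one captured frame stays in the volume."""
        return all(self.contains(*p) for p in points)


vol = CaptureVolume()
print(vol.contains(1.0, 1.7, 0.9))  # True: about 1.35 m from the axis, 1.7 m high
print(vol.contains(1.2, 1.0, 1.0))  # False: about 1.56 m from the axis
```

Because the recorded 3D data is a time series, the same check can be run on every frame of a take to flag moments when the subject leaves the volume.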

One application of this system is viewing volumetric video through WebAR (Figure 2). A smartphone browser superimposes the volumetric-video data of a person on the background image captured by the smartphone's rear camera, so the captured person appears to be actually present in front of the viewer, for example, standing on the palm of the viewer's hand. When the viewer points the smartphone at an arbitrary space, the volumetric-video content is displayed in that space on the smartphone screen. By changing the angle and distance of the smartphone relative to that space, the viewer can feel as if they are walking around or approaching the person in the volumetric video, an experience unique to XR content.
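
In the WebAR view, the volumetric content stays fixed at an anchor in real space and is rendered from the smartphone's current position. Walking around the person or approaching them therefore changes only the relative geometry between the phone and the anchor. The following minimal sketch computes that geometry. It assumes AR world coordinates in meters with the y axis up, and the function name `view_of_anchor` is hypothetical, not part of any WebAR API.

```python
import math


def view_of_anchor(phone_pos, anchor_pos):
    """Return (distance_m, azimuth_deg) of the phone relative to the anchored person.

    Azimuth is measured in the horizontal x-z plane: 0 deg means the phone is on
    the +z side of the anchor, 90 deg on the +x side.
    """
    distance_m = math.dist(phone_pos, anchor_pos)
    dx = phone_pos[0] - anchor_pos[0]
    dz = phone_pos[2] - anchor_pos[2]
    azimuth_deg = math.degrees(math.atan2(dx, dz)) % 360.0
    return distance_m, azimuth_deg


anchor = (0.0, 1.5, 0.0)  # hypothetical anchor placed at the phone's height
print(view_of_anchor((0.0, 1.5, 2.0), anchor))  # in front of the person, 2 m away
print(view_of_anchor((2.0, 1.5, 0.0), anchor))  # a quarter turn around them
print(view_of_anchor((0.0, 1.5, 1.0), anchor))  # stepped closer: smaller distance
```

In a real WebAR page, the phone's position would come from the AR framework's tracking. The renderer would then draw the volumetric frame from that pose, which is what produces the walk-around and zoom effects described above.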

- *2 Texture: An image mapped onto the surface of an object in 3D data to represent the appearance of that surface.

- *3 Depth information: Distance (depth) from the camera position to the subject.

In addition to featuring the above-described volumetric-video capturing equipment, NTT XR Studio is equipped with a volumetric-video distribution system and player that support a variety of devices, which enables the studio to provide a one-stop-shop service from capturing to content distribution.

3.1 Issues Concerning Distribution of Volumetric Video

Volumetric video can express free-viewpoint 3D images with a sense of presence and realism. However, it carries more information than 2D video, so its files are larger. As a result, when volumetric-video content must be downloaded before it is played back, the time from content selection to the start of playback is longer, and this wait can impair the user experience. For that reason, volumetric-video delivery has so far been used mainly for short content with small file sizes.

To deliver long content with large file sizes without compromising the user experience, the streaming-delivery method used for 2D video content is needed. This method divides the content file into smaller files along the timeline and stores those files on the server. The video player starts playback as soon as the first small file has been downloaded and continues playing while it downloads the subsequent files in time order. Conventional downloading fetches the entire content before playback starts, so streaming delivery shortens the time required to start playing the content. However, because the file format of volumetric video differs from that of 2D video, the streaming-delivery method used for 2D video cannot simply be applied to volumetric video, and a new delivery method is required.
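
Some rough arithmetic makes the benefit of segmenting concrete. With downloading, the player must wait for the whole file, whereas with streaming it waits only for the first segment. The sketch below uses hypothetical figures chosen for illustration, not measurements from the studio: a 60 s clip encoded at 20 Mbit/s, 2 s segments, and a 50 Mbit/s connection. It also ignores network latency and decoding time.

```python
import math


def split_into_segments(duration_s, segment_s):
    """Split content of `duration_s` seconds into (start, end) pairs along the timeline."""
    count = math.ceil(duration_s / segment_s)
    return [(i * segment_s, min((i + 1) * segment_s, duration_s)) for i in range(count)]


def startup_delay_s(bitrate_mbps, duration_s, segment_s, bandwidth_mbps, streaming):
    """Seconds from selection to first frame, ignoring latency and decoding time.

    Download: the whole file must arrive first. Streaming: only the first segment.
    """
    wait_for_s = min(segment_s, duration_s) if streaming else duration_s
    return bitrate_mbps * wait_for_s / bandwidth_mbps


print(split_into_segments(5, 2))  # [(0, 2), (2, 4), (4, 5)]
print(startup_delay_s(20, 60, 2, 50, streaming=False))  # about 24 s
print(startup_delay_s(20, 60, 2, 50, streaming=True))   # about 0.8 s
```

The download wait grows with the content length, while the streaming wait depends only on the segment length. This is why streaming becomes essential for long volumetric content.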

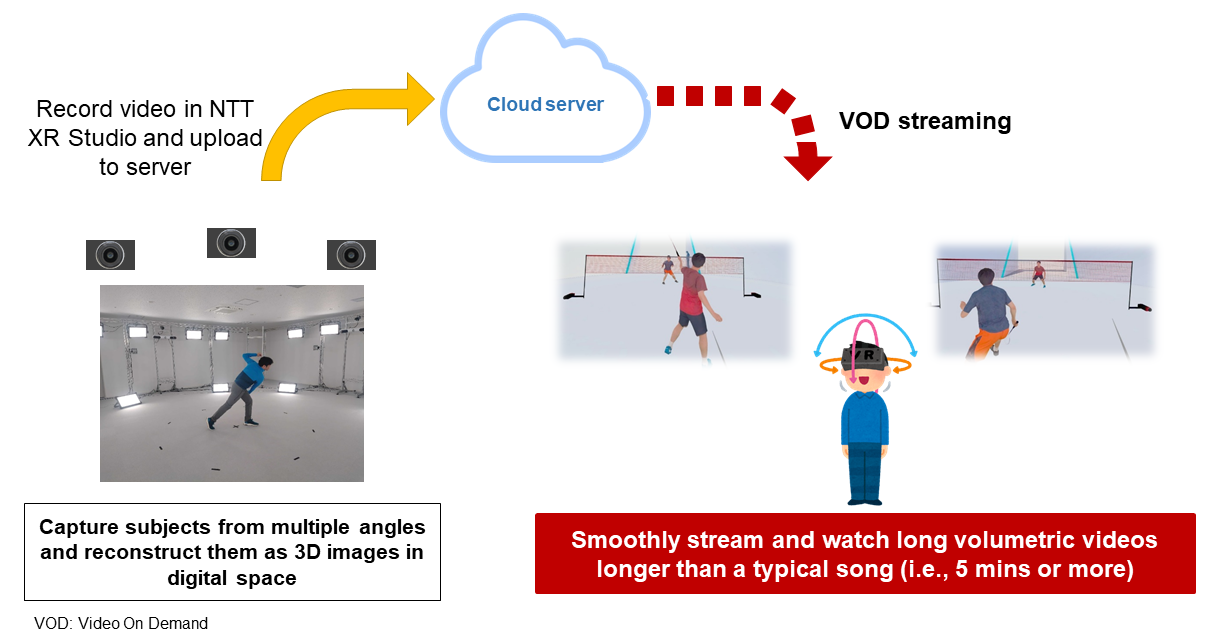

3.2 Streaming Delivery of Volumetric Video

To solve this issue, NTT DOCOMO, with technical cooperation from Arcturus Studios Holdings, Inc. [1], has developed a streaming-delivery technology for long content and a technology for optimizing the bit rate during streaming while maintaining a set level of content quality (Figure 3). First, the subject is recorded as 3D data with the volumetric-video capturing system described above. The 3D data is then converted to data for streaming distribution and uploaded to a cloud server. Users can view the volumetric-video content by accessing the server from a device equipped with a volumetric-video player. The ability to stream long, high-quality volumetric-video content is expected to reduce playback costs for users and broaden the range of content that can be distributed. The volumetric-video player using these technologies is compatible with a variety of devices, including Virtual Reality (VR) head-mounted displays*4, Mixed Reality (MR)*5 devices, smartphones, and PCs, so users can view volumetric-video content in a variety of settings regardless of location or application.
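
The article does not describe how the bit rate is optimized. One common approach in adaptive streaming is shown here only as an assumed illustration. Several encodings of the same content are prepared, and for each segment the player picks the highest bit rate that fits within the measured throughput, never dropping below a minimum quality level. The `Representation` type, the quality scores, and the 0.8 safety factor are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Representation:
    bitrate_kbps: int  # encoded bit rate of this version of the content
    quality: float     # hypothetical quality score in [0, 1]; higher is better


def choose_representation(reps, throughput_kbps, min_quality, safety=0.8):
    """Pick the representation to request for the next segment.

    Only versions at or above `min_quality` are considered. Among those, the
    highest bit rate that fits within `safety` x measured throughput is chosen;
    if none fits, the cheapest acceptable version is used so quality never
    falls below the floor (playback may then stall while buffering).
    """
    acceptable = [r for r in reps if r.quality >= min_quality]
    if not acceptable:
        raise ValueError("no representation meets the minimum quality")
    budget_kbps = throughput_kbps * safety
    fitting = [r for r in acceptable if r.bitrate_kbps <= budget_kbps]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    return min(acceptable, key=lambda r: r.bitrate_kbps)


ladder = [
    Representation(4_000, 0.60),
    Representation(8_000, 0.75),
    Representation(15_000, 0.85),
    Representation(25_000, 0.95),
]
print(choose_representation(ladder, throughput_kbps=12_000, min_quality=0.7))  # 8000 kbit/s version
```

The quality floor is what distinguishes this from purely throughput-driven selection. It trades a risk of rebuffering for a guarantee that the viewer never sees a version below the set quality level.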

Another issue is that no unified file format for volumetric video has been standardized, so different capturing equipment uses different file formats. The streaming-delivery and bit-rate optimization technologies described above address this with a dedicated editing tool that accepts several typical volumetric-video file formats as input. This enables streaming delivery of volumetric video regardless of the capturing equipment or file format.

- *4 VR head-mounted display: A generic term for a display device worn on the head. Two types are available: a monocular type that presents images to only one eye, and a binocular type that presents images to both eyes. Typical examples are glasses or goggles that present images on the lens.

- *5 MR: Technology for superimposing digital information on video taken of the real world and presenting the result to the user. In contrast to AR, MR makes information appear as if it is actually there in the real world from any viewpoint.

NTT XR Studio is equipped with optical motion-capture equipment. Motion capture is a technology for acquiring digital data about the movements of people and objects in real space. It is widely used for motion analysis in the sports and medical fields as well as for entertainment purposes, such as the production of Computer Graphics (CG) for movies and games.

4.1 Optical Motion Capture

Optical motion capture is a system that acquires 3D data on the motion of a subject. Markers are first attached to each part of the subject, multiple cameras capture the markers, and the 3D position of each marker is estimated from those images. When the subject is a person, the joint positions and angles can be estimated by matching the 3D positions of the markers on each part of the body against a predefined human-body model. Although optical motion capture requires a relatively large space to house multiple cameras, it offers higher detection accuracy than other methods and does not greatly restrict the subject's movements.
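
The two estimation steps described above can be illustrated with textbook geometry. Assuming calibrated cameras, each camera that sees a marker defines a ray from its optical center toward the marker. The marker's 3D position can then be estimated as the point closest to all of those rays in the least-squares sense, and a joint angle can be computed from three neighboring marker positions. This pure-Python sketch is a simplification for illustration only: commercial systems fit the markers to a full human-body model, and the function names here are hypothetical.

```python
import math


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(rays):
    """Least-squares 3D point closest to all rays.

    rays: (origin, direction) pairs, one per calibrated camera that sees the marker.
    Solves sum(P_k) x = sum(P_k o_k), where P_k = I - d_k d_k^T projects onto the
    plane perpendicular to ray k.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in rays:
        d = _normalize(direction)
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p
                b[i] += p * origin[j]
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; need two or more distinct views")
    point = []
    for k in range(3):  # Cramer's rule: replace column k with b
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        point.append(_det3(m) / det)
    return point


def joint_angle_deg(parent, joint, child):
    """Angle at `joint` between the segments toward `parent` and `child`."""
    u = [p - j for p, j in zip(parent, joint)]
    v = [c - j for c, j in zip(child, joint)]
    cos_t = sum(x * y for x, y in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))


rays = [((0.0, 0.0, 0.0), (1.0, 2.0, 3.0)),   # three cameras looking at one marker
        ((5.0, 0.0, 0.0), (-4.0, 2.0, 3.0)),
        ((0.0, 5.0, 0.0), (1.0, -3.0, 3.0))]
print(triangulate(rays))  # approximately [1.0, 2.0, 3.0]
print(joint_angle_deg((0, 1, 0), (0, 0, 0), (1, 0, 0)))  # e.g. shoulder-elbow-wrist
```

Using more cameras adds more rays to the same sum. This is one reason a multi-camera setup improves accuracy and tolerates a marker being hidden from some views.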

4.2 Production of Entertainment Content by Using Motion Capture and Live Distribution of That Content

As mentioned above, motion capture is widely used in entertainment applications such as the production of CG images for movies and games. Especially in the entertainment industry in recent years, 2D video produced with motion capture and 3D CG has been widely streamed live. Such video is created by manipulating 3D CG avatars in real time on the basis of performers' movements captured by motion capture. Operating high-quality 3D CG avatars in real time allows performers and users to communicate in real time in a manner unique to live performances.

In 2D video streaming using motion capture and 3D CG, the distributor generally performs the 3D CG rendering, captures the rendering results as 2D video, and distributes that video to users. There are two main reasons for this: (i) 3D CG rendering imposes a large processing load on the devices users view content on, and (ii) few devices support viewing of 3D content. In recent years, however, devices specialized for viewing 3D content, such as VR head-mounted displays and MR devices, have become increasingly popular, and some of them can render 3D CG in real time. Demand is therefore growing for 3D-content delivery that gives users of these devices a richer experience than 2D video.

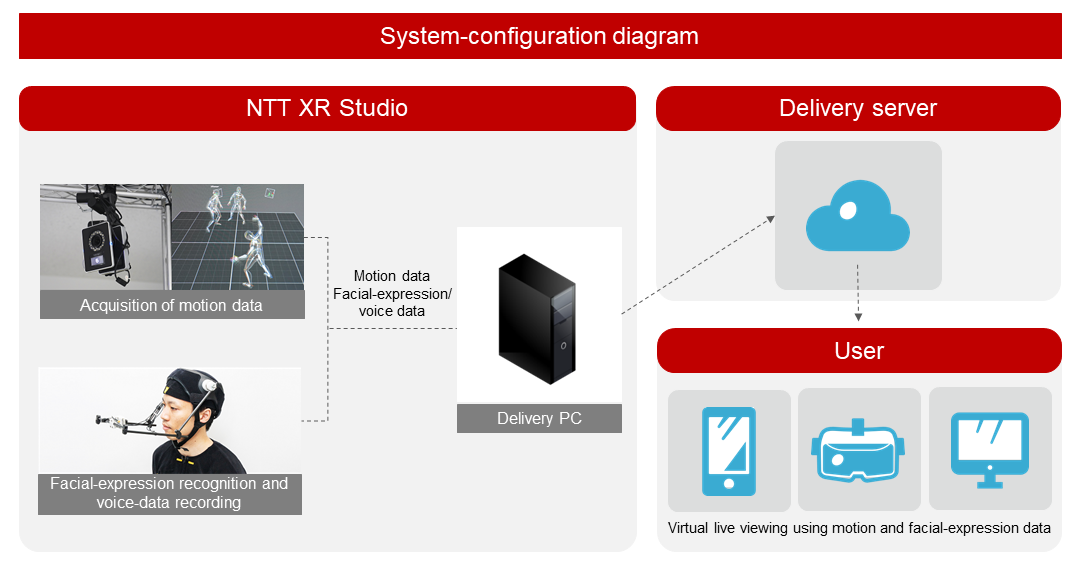

4.3 Live Distribution Technology for Motion Data Acquired by Motion Capture

To meet this demand and provide users with 3D CG content based on motion capture, NTT DOCOMO has developed a system that distributes motion data acquired by motion capture, together with voice data, live to users' devices (Figure 4). The system immediately processes the acquired motion and voice data on the delivery PC and uploads it to the delivery server. The player application installed on the user's device then successively receives the data from the delivery server as it is updated. The 3D CG avatar data is sent to the player application in advance, and the avatar is rendered on the device according to the motion data it receives. As a result, the user is provided with 3D content with a sense of presence and realism.
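
Because the avatar model reaches the player in advance, the live stream only has to carry per-frame pose data. The article does not specify the data format. The sketch below therefore assumes a hypothetical little-endian layout (a timestamp, the root position, and one rotation quaternion per joint) and estimates the resulting bit rate. The 50-joint, 60 fps figures in the usage line are illustrative assumptions.

```python
import struct

HEADER = "<d3f"  # hypothetical: timestamp (float64 s) + root position (3 x float32, m)
JOINT = "<4f"    # hypothetical: one unit quaternion (x, y, z, w) per joint


def pack_motion_frame(timestamp_s, root_pos, joint_quats):
    """Serialize one motion frame into bytes for upload to the delivery server."""
    header = struct.pack(HEADER, timestamp_s, *root_pos)
    body = b"".join(struct.pack(JOINT, *q) for q in joint_quats)
    return header + body


def unpack_motion_frame(data, num_joints):
    """Inverse of pack_motion_frame; the player applies the result to the avatar."""
    timestamp_s, x, y, z = struct.unpack_from(HEADER, data, 0)
    offset = struct.calcsize(HEADER)
    step = struct.calcsize(JOINT)
    quats = [struct.unpack_from(JOINT, data, offset + step * i) for i in range(num_joints)]
    return timestamp_s, (x, y, z), quats


def motion_bitrate_kbps(num_joints, fps):
    """Uncompressed bit rate of the motion stream under this layout."""
    frame_bytes = struct.calcsize(HEADER) + num_joints * struct.calcsize(JOINT)
    return frame_bytes * 8 * fps / 1000


print(motion_bitrate_kbps(50, 60))  # 820-byte frames, roughly 394 kbit/s
```

Under these assumptions, the motion stream stays at a few hundred kbit/s, and the device rather than the distributor bears the rendering cost, which matches the division of work described above.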

For motion-capture data, the system adopts a streaming protocol commonly used for 2D video, so that existing file-encryption methods for streaming can be applied and large-scale delivery can be handled with content-delivery networks*6.
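
The article does not name the 2D streaming protocol. The sketch below assumes HTTP Live Streaming (HLS) as one widely used example. It packages successive motion-data files as media segments listed in a live media playlist. Omitting `#EXT-X-ENDLIST` signals that the stream is still growing, and the optional `#EXT-X-KEY` line shows how the standard AES-128 segment encryption could be reused. Segment names and the key URI are hypothetical. Because HLS players expect audio/video segment formats, the dedicated player application, rather than a generic video player, would have to parse these segments.

```python
import math


def live_media_playlist(segment_uris, durations_s, first_sequence, key_uri=None):
    """Build an HLS-style live media playlist for motion-data segments.

    No #EXT-X-ENDLIST tag is written, so clients keep reloading the playlist
    to pick up newly uploaded segments.
    """
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal segment durations
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations_s))}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    if key_uri is not None:
        # Standard HLS segment encryption; applies to every segment that follows.
        lines.append(f'#EXT-X-KEY:METHOD=AES-128,URI="{key_uri}"')
    for uri, duration in zip(segment_uris, durations_s):
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    return "\n".join(lines) + "\n"


print(live_media_playlist(["motion_00042.bin", "motion_00043.bin"], [2.0, 2.0], 42))
```

Since segments and playlists are ordinary HTTP files, a content-delivery network can cache them in the same way it caches 2D video segments.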

In addition to motion-capture data, the system can stream facial-expression data obtained in real time through facial-expression analysis and voice data captured by microphone. The user's device applies the received motion, facial-expression, and voice data to the 3D CG avatar data stored on it, so the user can view rich 3D CG live content from any perspective.
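
Motion, facial-expression, and voice data arrive as separate streams at different rates, so the player has to combine them on a common timeline. The article does not describe the synchronization method. A simple approach, assumed here, is to timestamp every sample and, for each motion frame, use the most recent facial-expression sample at or before that time. The blendshape-style weights and function names are hypothetical. A real player might also drive this timeline from the audio playback clock so that lip movements stay in sync with the voice.

```python
from bisect import bisect_right


def latest_at_or_before(timestamps, values, t):
    """Return the value with the latest timestamp <= t, or None if there is none yet.

    `timestamps` must be sorted in ascending order.
    """
    i = bisect_right(timestamps, t)
    return values[i - 1] if i > 0 else None


def align_face_to_motion(motion_ts, face_ts, face_values):
    """Pair each motion-frame timestamp with the most recent facial-expression sample."""
    return [(t, latest_at_or_before(face_ts, face_values, t)) for t in motion_ts]


motion_ts = [0.00, 0.02, 0.04, 0.06]            # e.g. motion frames at 50 fps
face_ts = [0.01, 0.05]                          # slower facial-analysis output
face_values = [{"smile": 0.2}, {"smile": 0.9}]  # hypothetical blendshape weights
for t, face in align_face_to_motion(motion_ts, face_ts, face_values):
    print(t, face)
```

Holding the last facial sample until a new one arrives keeps the avatar's expression stable when the facial-analysis stream updates less often than the motion stream.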

- *6 Content-delivery network: A network for high-speed, large-scale delivery of content.

In this article, the facilities of NTT XR Studio and the features of each of its systems for capturing, editing, and distribution were described, and examples of their use were presented. NTT DOCOMO provides an all-in-one environment covering everything from video capture to streaming at its own XR studio. It opens this environment to external partners for collaborative creation while obtaining feedback from content developers inside and outside the company. In this way, we hope to promote the smooth production and dissemination of XR content.

REFERENCES

- [1] ARCTURUS: “Powers volumetric video.”

https://arcturus.studio/
