Special Articles on XR—Initiatives to Form an XR Ecosystem—

Spatial-AR Development Package Enabling One-stop Development of AR Services Linked to Spaces

Spatial-recognition AR, AR-content-development Tool, AR Cloud

Takuro Kuribara†, Ryosuke Kurachi†, Taishi Yamamoto†, Osamu Goto† and Shinji Kimura†

Communication Device Development Department

† Currently, Smart Life Business Strategy Department, Smart Life Business Company

Abstract

In recent years, spatially aware AR (location-based AR) services using smartphones and glasses-type devices have started to appear. The challenge for providing spatially aware AR services is that many technologies and systems, such as self-location estimation and AR-content management and distribution, are required, and the threshold for providing such services is high for service providers. Aiming to lower that threshold, NTT DOCOMO has therefore developed a spatial-AR development package. This package enables one-stop development of spatial AR services in a shorter time and at a lower cost than conventional development, which requires an assortment of necessary technologies and systems.

01. Introduction

In recent years, spatially aware Augmented Reality (AR) services*1 using smartphones and glasses-type devices have started to appear. Spatially aware AR services can provide users with AR experiences linked to the space around them. They are thus expected to be used for applications such as providing digital information (such as virtual advertisements and coupons) and entertainment content in specific spaces such as real-world towns and commercial facilities. However, the threshold for providing spatially aware AR services is higher than that for providing vision-based AR services because it is necessary to use multiple technologies and systems, including technologies for acquiring data in real space for constructing a digital twin*2, technologies for arranging AR content linked to that space, and setting up servers for sharing and distributing AR content.

Aiming to lower that threshold, NTT DOCOMO has been researching and developing the AR Cloud*3 [1], and by utilizing the results of this research and the knowledge gained through demonstration experiments, we have developed a one-stop development package for creating spatial AR services.

This package provides the technologies and systems necessary for developing spatially aware AR content with a single package. As a result, compared to conventional development—which requires a complete set of necessary technologies and systems—development with this package enables service providers to offer AR services in a shorter time and at a lower cost. By using this package, service providers can develop AR services that provide the following AR experiences:

- Shared AR experiences on a variety of devices (including glasses type)

- AR experiences that switch advertisements, coupons, and display language—in the same space—according to user attributes

- Sharing interactive content among friends and family

- AR experiences using real-time information acquired by Internet of Things (IoT) sensors (e.g., motion sensors)

The distinctive technologies that comprise the spatial-AR development package developed at NTT DOCOMO are described hereafter.

- *1 Spatially aware AR service: An AR service that registers AR content at a specific location and, through an AR device, displays it superimposed on real space for visitors to that location.

- *2 Digital twin: A reproduction in digital space, built from various forms of data, of places, objects, and events that exist in the real world, as if it were their twin.

- *3 AR Cloud: A technology platform that superimposes AR content on a real space and enables interactive sharing of common AR content among multiple devices.

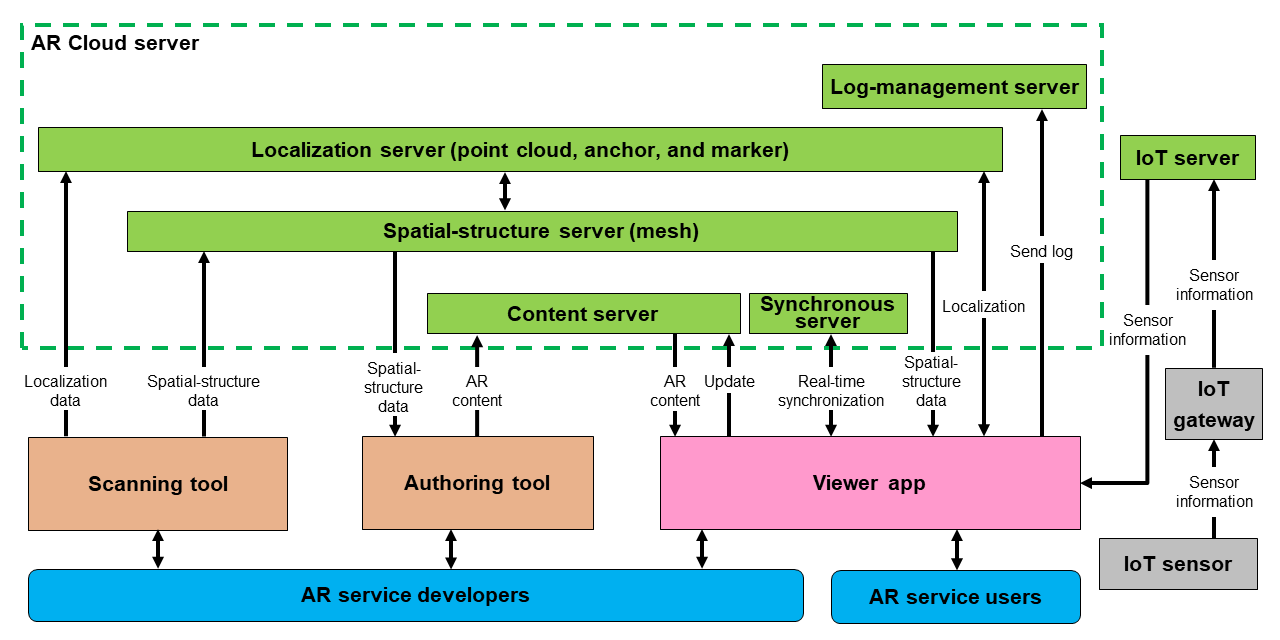

The configuration of the developed spatial-AR-development package is shown in Figure 1. The package consists of the following functional blocks: a scanning tool for building a digital twin, an authoring tool*4 for developing AR content, an AR cloud server for distributing and sharing AR content, and a viewer application for experiencing the developed AR content. The AR cloud server consists of a self-location estimation server (hereinafter referred to as “a localization server”), a spatial-structure server, a content server, a synchronous server, and a log-management server. The three-step flow of development of AR services by using each functional block is summarized as follows:

- The developer uses the scanning tool to acquire spatial data in real space. Two types of data are acquired: (i) information used for estimating self-location (point clouds and anchors*5) and (ii) spatial-structure information (mesh*6) used for developing AR content. After unifying the coordinate systems*7 for each set of data (i) and (ii), the developer stores the acquired data in the localization server and the spatial-structure server, respectively.

- The developer uses the authoring tool to develop AR content. The authoring tool determines the location to place the AR content by referencing spatial-structure information retrieved from the spatial-structure server. The AR content is stored on the content server.

- Running on a variety of devices, including glasses types, the viewer application obtains AR content and information used for estimating self-location from the localization server, the spatial-structure server, and the content server. Users experience the developed AR services through the viewer application.
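The first step of this flow unifies the coordinate systems of the localization data and the mesh. The source does not describe how the package does this, so the following is only a minimal sketch. It assumes the two data sets differ by a known rotation about the vertical axis plus a translation; `make_rigid_transform`, the angle, and the offsets are hypothetical.

```python
import math


def make_rigid_transform(yaw_deg, translation):
    """Return a function that maps (x, y, z) from the mesh frame into the
    point-cloud frame: rotate about the vertical (y) axis, then translate.
    The transform parameters are assumed to be known (hypothetical values)."""
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation

    def apply(point):
        x, y, z = point
        return (c * x + s * z + tx, y + ty, -s * x + c * z + tz)

    return apply


# Hypothetical example: the mesh frame is turned 90 degrees and shifted.
mesh_to_cloud = make_rigid_transform(90.0, (1.0, 0.0, 2.0))
mesh_vertex = (1.0, 0.5, 0.0)
unified = mesh_to_cloud(mesh_vertex)
print(unified)
```

Once every mesh vertex and every anchor is expressed in one frame, content placed against the mesh ends up where the localization result says the device is looking.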

In this flow of development of AR services, the most-important technical elements to consider are “localization technology,” “development of AR content,” and “AR-content management and distribution technology.” These elements are described below.

2.1 Localization Technology

The localization technology estimates the location and orientation of an AR device in real space, which allows AR content to be superimposed on arbitrary locations in that space. Spatially aware AR services require higher accuracy than that of the Global Positioning System (GPS), the most common positioning system, which has an error margin of about 10 m, so the development package performs positioning from camera images. So that the most appropriate self-location estimation technology can be chosen for the place where the user’s camera captures images, the package supports three camera-image-based technologies: marker-based, anchor-based, and point-cloud-based.

- Marker-based technology: The developer registers a reference image (marker) in advance, and the position is estimated when the marker is recognized by the user’s camera. This technology is intended for simple applications such as displaying AR objects related to images.

- Anchor-based technology: (i) The developer takes a picture in advance of the area where the AR content is to be placed and registers it as an anchor, and (ii) the location is estimated when the anchor is recognized by the user’s camera. This technology is intended for providing AR experiences in a narrow space.

- Point-cloud-based technology: Self-location is estimated by comparing feature points acquired by the user’s camera against a “feature-point map” previously obtained by the developer. This technology is intended for providing AR experiences over a wide area. The estimation procedure is summarized as follows: (i) the developer creates a feature-point map in advance by capturing a wide area (including the place where the AR content is to be placed) with a camera; (ii) the images captured by the user’s camera are sent to the localization server periodically (about once every few seconds) via the viewer application; (iii) the localization server extracts the feature points of the images sent from the viewer application; (iv) the self-location is estimated by matching the feature points with the feature-point map.
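Step (iv) of the point-cloud-based procedure, matching feature points against the feature-point map, can be pictured with a small sketch. The source does not say which matching algorithm the localization server uses. This example assumes a plain nearest-neighbour search with a ratio test, and the descriptors, positions, and the name `match_features` are hypothetical. Solving for the camera pose from the resulting matches is omitted.

```python
import math


def match_features(query_descriptors, map_entries, ratio=0.8):
    """Match each query descriptor to its nearest map descriptor.

    map_entries is a list of (descriptor, position_3d) pairs. A match is
    kept only when the nearest distance is clearly smaller than the
    second-nearest one (ratio test), which discards ambiguous points.
    Returns a list of (query_index, map_index) pairs.
    """
    matches = []
    for qi, q in enumerate(query_descriptors):
        ranked = sorted(
            (math.dist(q, desc), mi) for mi, (desc, _pos) in enumerate(map_entries)
        )
        if len(ranked) < 2:
            continue
        (d1, best), (d2, _second) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


# Hypothetical feature-point map: (descriptor, 3D position in the map frame).
feature_map = [
    ((0.0, 0.0, 1.0), (2.0, 1.5, 0.0)),
    ((1.0, 0.0, 0.0), (4.0, 1.2, 3.0)),
    ((0.0, 1.0, 0.0), (0.5, 2.0, 5.0)),
]
# Descriptors extracted from the image sent by the viewer application.
query = [(0.9, 0.1, 0.0), (0.5, 0.5, 0.0)]

matches = match_features(query, feature_map)
print(matches)
```

The second query descriptor is equally close to two map points, so the ratio test rejects it. In a real pipeline the surviving 2D-to-3D correspondences would be passed to a pose solver.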

2.2 Development of AR Content

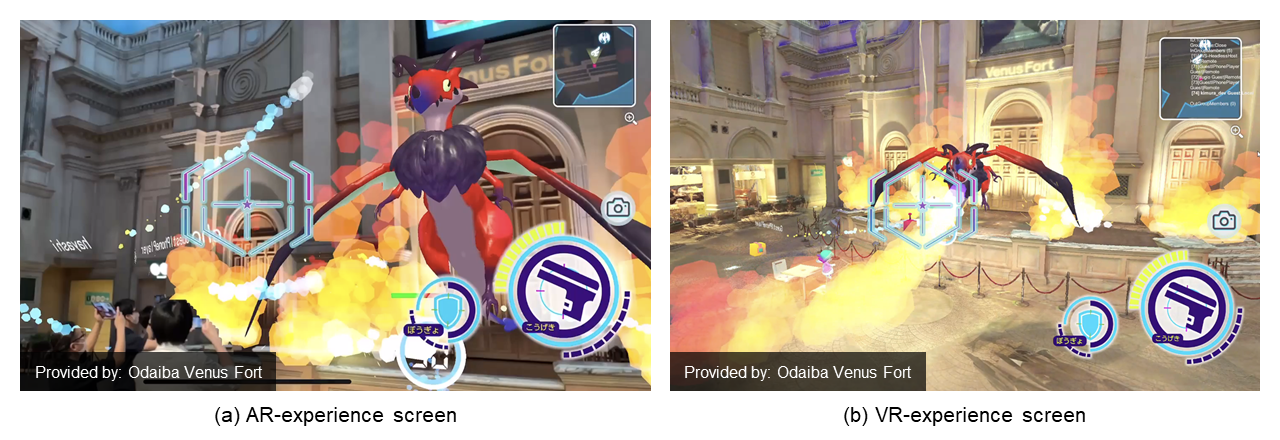

AR content is developed by using the authoring tool, a PC application provided by this package. Developers use the authoring tool to (i) retrieve spatial-structure information (mesh) stored on the spatial-structure server and (ii) develop and arrange AR content associated with the spatial-structure information. Developing AR content generally requires programming knowledge; to lower that barrier, the package employs visual scripting*8, which lets non-programmers develop AR content simply by connecting the provided nodes*9. Complex functions such as sharing location information between viewer applications and sharing AR content among multiple devices are provided as nodes, which makes it easy to develop multiplayer and network-linked content.

We developed “Dragon Battle,” AR content that uses these synchronization functions, and presented it in a demonstration experiment conducted in March 2021 [2], as shown in Figure 2(a). This AR content allows multiple users to fight a dragon simultaneously, and it reflects the locations and actions of each user in real time. We also developed a function that allows users to experience, in real time in Virtual Reality (VR), the same space as that containing the AR content, and presented it in a demonstration experiment conducted in July 2021 [3], as shown in Figure 2(b). This function allows users to tour the venue from remote locations and enjoy online survival games such as Dragon Battle together with users having the AR experience on site. We demonstrated that these functions make it possible to provide not only on-site AR experiences but also remote VR experiences via a PC and VR goggles. In that way, the package makes it possible to develop an Extended Reality (XR) space in which users can share the same experiences regardless of location.
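The idea of building logic by connecting nodes can be illustrated with a toy node graph. This is only a sketch of the general visual-scripting concept, not the package’s node set or API: the `Node` class and the node names (`UserDistance`, `Threshold`, `LessThan`, `ShowIfTrue`) are hypothetical.

```python
class Node:
    """A visual-scripting node: a named operation plus the nodes feeding it."""

    def __init__(self, name, operation, inputs=()):
        self.name = name
        self.operation = operation
        self.inputs = list(inputs)

    def evaluate(self):
        # Evaluate upstream nodes first, then apply this node's operation.
        values = [node.evaluate() for node in self.inputs]
        return self.operation(*values)


# Hypothetical nodes, wired the way a developer would connect boxes on screen.
user_distance = Node("UserDistance", lambda: 1.5)  # metres to the AR object
threshold = Node("Threshold", lambda: 2.0)
is_near = Node("LessThan", lambda a, b: a < b, [user_distance, threshold])
action = Node(
    "ShowIfTrue",
    lambda near: "show dragon" if near else "hide dragon",
    [is_near],
)

result = action.evaluate()
print(result)
```

A developer working with such a tool only chooses nodes and draws the connections; the evaluation order follows from the graph, which is why no programming is needed for simple behaviour.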

Since AR content is linked to real space, it is desirable to be able to check and adjust AR content under development at its actual location. The authoring tool enables this by interfacing with the viewer application and the content server. The developer adjusts the location of the AR content while displaying it on site with the viewer application and saves the result to the content server. The authoring tool can then retrieve the adjusted location information from the content server and update its own copy of the AR content. The authoring tool also incorporates a draft function. AR content saved as a draft is displayed only in the developer’s viewer application and does not affect AR content that has already been released. The draft function therefore makes it easy to adjust AR content.
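The visibility rule behind the draft function can be sketched as a simple filter. The record fields and the function name `visible_content` are assumptions made for illustration; the source does not describe how the content server stores drafts.

```python
from dataclasses import dataclass


@dataclass
class ContentRecord:
    content_id: str
    status: str  # "released" or "draft" (hypothetical status values)
    owner: str   # developer who saved the record


def visible_content(records, viewer_user):
    """Released content is visible to everyone; drafts only to their owner."""
    return [
        record.content_id
        for record in records
        if record.status == "released" or record.owner == viewer_user
    ]


records = [
    ContentRecord("dragon", "released", "alice"),
    ContentRecord("dragon_v2", "draft", "alice"),
]

developer_view = visible_content(records, "alice")
visitor_view = visible_content(records, "visitor")
print(developer_view, visitor_view)
```

Because the filter runs per viewer, the developer can try out `dragon_v2` on site while visitors continue to see only the released content.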

2.3 AR-content Management and Distribution Technology

The AR-content management and distribution technology makes it possible to manage and distribute AR content linked to objects and locations. The content server of the package manages the AR content and distributes it to the viewer application.

In a typical application, changing AR content requires rebuilding and reinstalling the application. In contrast, our development package allows AR content to be managed by both the authoring tool and the viewer application; consequently, AR content registered with the authoring tool can be checked immediately in the viewer application without rebuilding the application. The viewer application supports a variety of device environments and can currently be distributed to iOS, Android, MagicLeap, and Windows (VR) devices. The viewer application can also output various logs; accordingly, detailed circulation logs that trace localization results, as well as the number of views of AR content, can be stored on the log-management server and retrieved by the developer.
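Treating AR content as data fetched at run time, rather than as part of the application build, is what removes the rebuild step. The following sketch illustrates that idea together with a simple view counter. The `ContentServer` and `Viewer` classes are hypothetical in-memory stand-ins, not the package’s actual servers or protocol.

```python
import json
from collections import Counter


class ContentServer:
    """In-memory stand-in for a content server (hypothetical)."""

    def __init__(self):
        self._entries = {}

    def register(self, content_id, definition):
        self._entries[content_id] = definition

    def export(self):
        # Serialize to JSON, the way content might travel over a network.
        return json.dumps(self._entries)


class Viewer:
    """Viewer that loads content definitions at run time and logs views."""

    def __init__(self, server):
        self.server = server
        self.scene = {}
        self.view_log = Counter()

    def refresh(self):
        self.scene = json.loads(self.server.export())

    def view(self, content_id):
        self.view_log[content_id] += 1
        return self.scene[content_id]


server = ContentServer()
viewer = Viewer(server)

server.register("coupon", {"text": "10% off", "position": [1.0, 1.5, 0.0]})
viewer.refresh()
first = viewer.view("coupon")["text"]

# The developer edits the content; the viewer code itself is unchanged.
server.register("coupon", {"text": "20% off", "position": [1.0, 1.5, 0.0]})
viewer.refresh()
second = viewer.view("coupon")["text"]
print(first, second, viewer.view_log["coupon"])
```

The same viewer object shows the updated coupon after a refresh, and the counter is the kind of record that could be forwarded to a log-management server.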

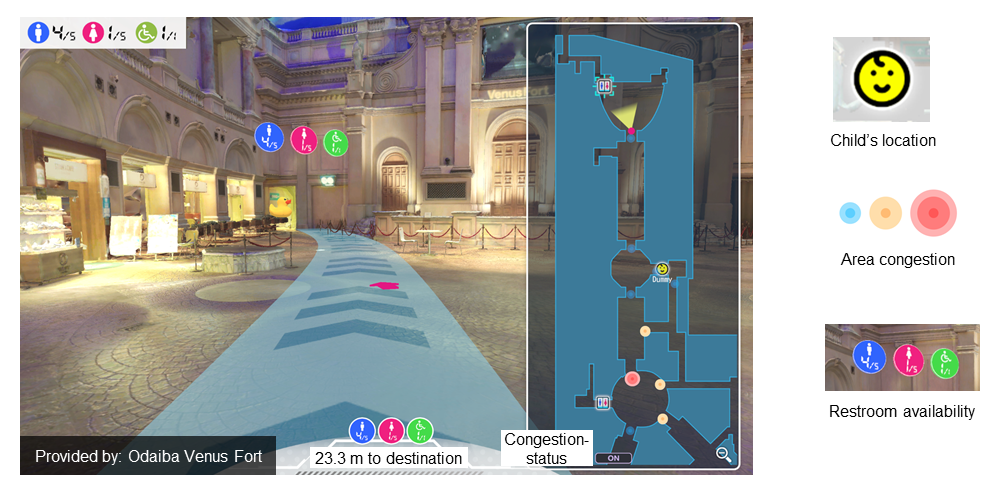

The package also provides a node that works with IoT sensors that sense the environment (e.g., motion sensors, beacons, and open/close sensors). Sensor information is collected via an IoT gateway*10 and distributed to the viewer application. As shown in Figure 3, by linking with sensors, it is possible, for example, to display real-time AR information on the congestion in a museum area, the location of children carrying beacons, and the availability of restrooms.
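How raw sensor readings might become the text shown in an AR label can be sketched as follows. The thresholds, the reading format, and the function names are all assumptions made for illustration; the source does not specify how the package interprets sensor data.

```python
def congestion_label(detections_per_minute, busy=20, crowded=50):
    """Turn a motion-sensor detection rate into a coarse congestion label.
    The thresholds are hypothetical."""
    if detections_per_minute >= crowded:
        return "crowded"
    if detections_per_minute >= busy:
        return "busy"
    return "quiet"


def restroom_label(door_states):
    """Summarize open/close sensors; a closed door is assumed to mean occupied."""
    free = sum(1 for state in door_states if state == "open")
    return f"{free} of {len(door_states)} free"


def build_overlay(readings):
    """Map each sensed place to the text an AR label could display."""
    overlay = {}
    for reading in readings:
        if reading["kind"] == "motion":
            overlay[reading["place"]] = congestion_label(reading["value"])
        elif reading["kind"] == "door":
            overlay[reading["place"]] = restroom_label(reading["value"])
    return overlay


# Hypothetical readings as they might arrive from an IoT gateway.
readings = [
    {"kind": "motion", "place": "Exhibition Hall A", "value": 62},
    {"kind": "motion", "place": "Exhibition Hall B", "value": 8},
    {"kind": "door", "place": "Restroom 1F", "value": ["open", "closed", "open"]},
]

overlay = build_overlay(readings)
print(overlay)
```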

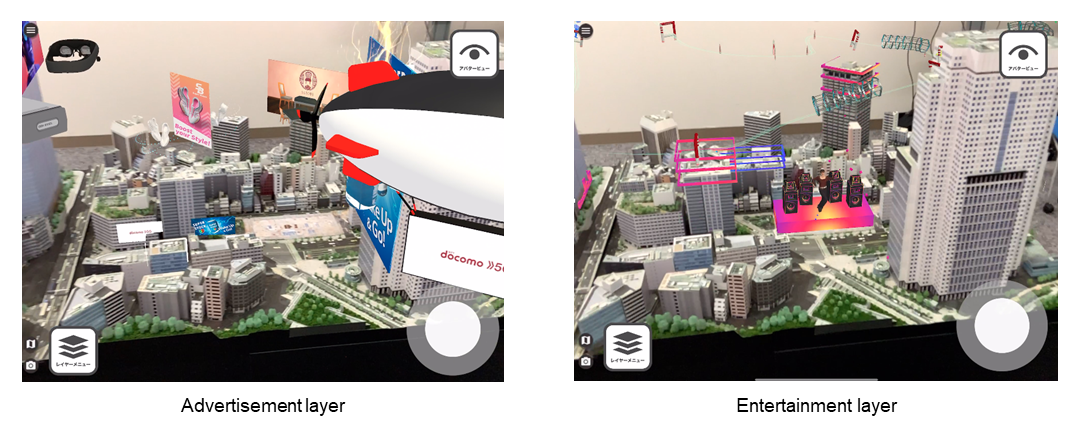

AR content is managed in units of layers, and the viewer application can display multiple layers simultaneously or switch between them. An example of displaying different AR content in the same space by switching layers is shown in Figure 4. This layer function allows for a multi-layered spatial representation unique to AR.
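Layer switching can be pictured as choosing which named groups of content are active for a given user. The layer names, the user attributes, and the selection rule below are hypothetical; this is a sketch of the concept only.

```python
# Hypothetical layers, each holding the AR items placed in the same space.
LAYERS = {
    "guide_en": ["Welcome sign (English)", "Floor map (English)"],
    "guide_ja": ["Welcome sign (Japanese)", "Floor map (Japanese)"],
    "coupons": ["Cafe coupon"],
}


def layers_for_user(language, wants_coupons):
    """Pick the active layers from user attributes (assumed selection rule)."""
    active = ["guide_ja" if language == "ja" else "guide_en"]
    if wants_coupons:
        active.append("coupons")
    return active


def visible_items(layers, active_layers):
    """Collect the items of all active layers, in layer order."""
    return [item for name in active_layers for item in layers[name]]


tourist = visible_items(LAYERS, layers_for_user("en", wants_coupons=True))
local = visible_items(LAYERS, layers_for_user("ja", wants_coupons=False))
print(tourist)
print(local)
```

Two users standing in the same spot thus see different content, which matches the earlier example of switching advertisements, coupons, and display language according to user attributes.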

- *4 Authoring tool: Application software for editing different types of data, such as text and images, to create a single piece of software or content. In this article, it refers to the proprietary application software of the spatial-AR development package for creating AR content.

- *5 Anchor: Information that indicates the location in reality to be used as a reference when arranging AR content.

- *6 Mesh: Spatial-model data that represents the shape of a three-dimensional object by means of a set of triangles, quadrilaterals, and other data.

- *7 Coordinate systems: A system comprising an origin and coordinate axes for representing arbitrary coordinates. The combination of coordinates and coordinate system allows representation of unique points.

- *8 Visual scripting: A method of describing various kinds of logic by combining and connecting visually comprehensible objects (nodes (see *9)).

- *9 Node: A component used in visual scripting. Nodes are combined to form logic.

- *10 IoT gateway: An intermediate device that has functions such as protocol conversion and data transfer to allow communications between devices. In this article, it refers to a specific device developed to collect and relay data to be sent from sensors to the server.

A spatial-AR development package that enables one-stop development of AR services linked to spaces was described in this article. The main technical elements of the package are “localization technology,” “development of AR content,” and “AR-content management and distribution technology.” At NTT DOCOMO, we plan to continue to develop AR services using this package while continuously improving and updating its functions on the basis of user feedback. We hope that this package will help AR services become more widespread.

REFERENCES

- [1] K. Hayashi et al.: “AR/MR Cloud Technology to Provide Shared AR/MR Experiences across Multiple Devices,” NTT DOCOMO Technical Journal, Vol. 22, No. 4, pp. 43-44, Apr. 2021.

https://www.docomo.ne.jp/english/binary/pdf/corporate/technology/rd/technical_journal/bn/vol22_4/vol22_4_006en.pdf

- [2] Mori Building Co., Ltd. and NTT DOCOMO: “Venus Fort AR Demonstration Experiment,” Mar. 2021 (in Japanese).

https://www.docomo.ne.jp/binary/pdf/info/news_release/topics_210309_00.pdf

- [3] Mori Building Co., Ltd. and NTT DOCOMO: “Demonstration Experiment to Provide Interactive XR Experience at Odaiba Venus Fort,” Jul. 2021 (in Japanese).

https://www.docomo.ne.jp/binary/pdf/corporate/technology/rd/topics/2021/topics_210706_00.pdf